Predicting Potentially Hazardous Chemical Reactions Using an Explainable Neural Network

Abstract

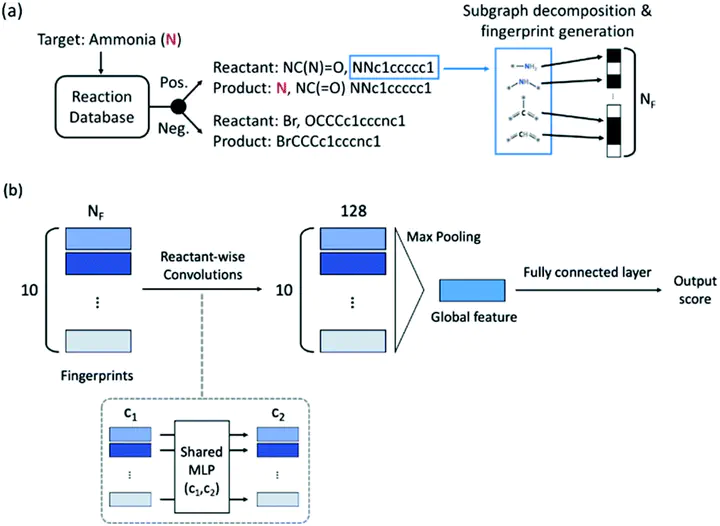

Predicting potentially dangerous chemical reactions is a critical task for laboratory safety. However, experimentally screening reaction conditions for hazardous or explosive byproducts takes substantial time and cost; machine learning prediction could accelerate this process and guide detailed experimental investigations. Several machine learning models have been developed that predict the major products of chemical reactions with reasonable accuracy. However, these methods may not be accurate enough for predicting hazardous products, a task that requires a particularly low false negative rate so that no dangerous reaction is missed. In this work, we propose an explainable artificial intelligence model that predicts the formation of hazardous reaction products as a binary classification. The reactant molecules are transformed into substructure-encoded fingerprints and then fed into a convolutional neural network, which makes a binary decision for the reaction. The proposed model shows a false negative rate of 0.09, compared with 0.47–0.66 for existing main product prediction models. To explain which substructures of the given reactant molecules matter for the predicted formation of the target hazardous product, we apply an input attribution method, layer-wise relevance propagation, which computes the contribution of each individual input feature for each input sample. The computed attributions match some existing chemical intuitions and mechanisms, and also offer a way to analyze possible data-imbalance issues in the current predictions, which are based on relatively small positive datasets. We expect that the proposed hazardous product prediction model will complement existing main product prediction models and experimental investigations.
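To illustrate how layer-wise relevance propagation assigns a prediction back to individual input bits, the following is a minimal sketch of the epsilon rule on a toy two-layer dense network. It is not the convolutional architecture used in this work: the 4-bit fingerprint `x`, the weights `W1` and `W2`, and the helper `lrp_epsilon_dense` are all hypothetical and chosen only to keep the arithmetic easy to follow.

```python
import numpy as np

def lrp_epsilon_dense(a, W, R_out, eps=1e-6):
    """Redistribute the relevance R_out of a bias-free dense layer (z = a @ W)
    onto its inputs a, using the LRP epsilon rule."""
    z = a @ W                                  # pre-activations, shape (n_out,)
    z = z + eps * np.where(z >= 0, 1.0, -1.0)  # stabilizer keeps the division finite
    s = R_out / z                              # relevance per unit of pre-activation
    return a * (W @ s)                         # R_i = a_i * sum_j W_ij * s_j

# Hypothetical toy input: a 4-bit substructure fingerprint (bit 1 is absent).
x = np.array([1.0, 0.0, 1.0, 1.0])

# Hypothetical weights, not taken from any trained model.
W1 = np.array([[ 0.5, -0.2],
               [ 0.3,  0.8],
               [-0.4,  0.6],
               [ 0.7,  0.1]])
W2 = np.array([[ 1.0],
               [-0.5]])

h = np.maximum(0.0, x @ W1)   # hidden ReLU activations
y = h @ W2                    # output logit, shape (1,)

# Start from the output logit and propagate relevance back layer by layer.
R_h = lrp_epsilon_dense(h, W2, y)
R_x = lrp_epsilon_dense(x, W1, R_h)

print("logit:", y.item())
print("relevance per fingerprint bit:", R_x)
```

In this toy case the per-bit relevances sum to the output logit (up to the small stabilizer), and the absent bit receives zero relevance, so positive and negative contributions of the present substructures can be read off directly.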